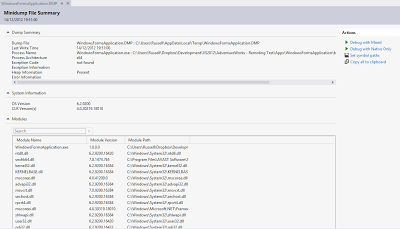

So what is IntelliTrace? Well, it's like memory dump analysis on steroids. A dump only lets you see the state of the frames on the call stack at the moment the dump was taken. IntelliTrace, by contrast, can be configured to collect data at far greater verbosity, down to the level of individual method calls. This gives a debugging experience much closer to live debugging. The difference is that the state information has already been collected, so you can't modify it, either deliberately or accidentally.

Another great benefit of IntelliTrace, when configured to do so, is its ability to collect gesture information. This means you no longer need to ask the tester or client for reproduction steps, as all the information is right there in the .iTrace file.

IntelliTrace can also be incredibly useful for debugging applications that have a high cyclomatic complexity because clients can extensively reconfigure the product. You no longer need to spend an enormous amount of time and money setting up an environment that matches the client's configuration, assuming that is even possible. Because we are performing historical debugging against an .iTrace file that has already captured the information relevant to the bug, we can jump straight in and do what we do best.

One of the biggest criticisms of IntelliTrace, however, is its initial cost. The ability to debug against a collected .iTrace file is only available in the Ultimate edition of Visual Studio. True, this is an expensive product, but I don't think it takes long before you see a full return on that investment and indeed come out ahead. The gain is both financial and in a strengthened reputation for responding quickly to difficult production issues. At the very least, I think it makes perfect economic sense to equip your customer-facing maintenance teams with this feature.

Now that IntelliTrace can be used to step through production issues, it has become a formidable tool for the maintenance engineer. Alongside dump file analysis, it has the potential to make the "No repro" issue a thing of the past. The problem would then only rear its head in non-deterministic or load-based issues.